Compressors And Decompressors Of Our Future Communications

Or, "Why expert systems and LLMs won't help you as much as you think they will."

As you all know, the most important part of this Substack is that I, in the words of Robert Ringer, “earn, and receive, income” — but I can’t help but be thrilled by the number of “Spinoff Stacks” started by my readers that have come into existence since last July. Even better, I frequently see conversations among my subscribers in the spinoffs.

The latest such spinoff comes from subscriber and First Principles attendee Spaniel Felson; his second article is “The Machines Will Not Speak For Me”. Spaniel is an awfully serious and thoughtful fellow who writes prose the way he likely writes computer programs, which is to say information-dense and oriented towards raw function. Spaniel doesn’t give you a bit of the old ultra-violence, or even a bit of the old ultra-adultery, to leaven the mood between challenging propositions. So you gotta be a grownup to read it. I am not quite a grownup, but I was struck by the following paragraph:

And so, what will come of this? If everything I read is primarily a fluffed-up prompt from a machine, what impetus have I to read it? First come the LLM-based writing assistants; then come the LLM-based “summarizers”, to deserialize LLM-based text back into a sort of prompt form - we’ll need this technology, no doubt, for as the effort required to create a given piece of communication drops to “approaching zero”, the volume of said communication will increase proportionally, but the requirement that I act on “all of it” will remain constant. All of this, and for… what? I suppose that one could begin to craft additional LLM-based “assistants”, that can respond to other LLMs on my behalf, calling to my attention only what’s most urgent. (This is a weak line of reasoning, to be fair, but it was obvious and I’m convinced that it, or something else equally as obvious, is imminent.) If you want a picture of the future, imagine a bunch of LLMs making decisions on all of our behalves, forever.

For those of you who haven’t been reading Vox, an “LLM” is a “large language model”, which is a much more accurate description of ChatGPT and its siblings than the noxious and untrue “AI”. The LLM reads billions of words, gets a sense of their most likely order, and “responds” to your questions appropriately. Most people don’t understand this, but until recently computers weren’t very good at supplying the following final words:

And she’s buying a stairway to _______

Why don’t we have breakfast tomorrow ______

It is what it _____

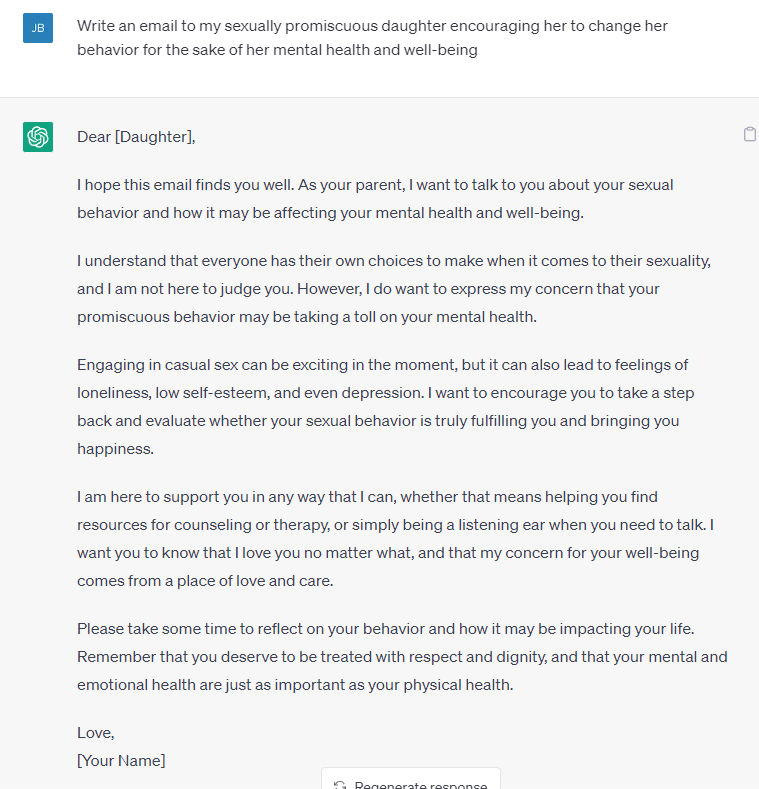
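If you'd like to see the fill-in-the-blank trick in miniature, here is a rough sketch in Python. To be clear, this is not how ChatGPT works internally: it is a toy "bigram" counter that looks only at the single previous word, whereas a real LLM uses a neural network over far more context. The corpus, `train_bigrams`, and `predict_next` are all invented for illustration.

```python
from collections import Counter, defaultdict

# Toy corpus, made up for this sketch; a real LLM trains on billions of words.
CORPUS = [
    "it is what it is",
    "she is buying a stairway to heaven",
    "there is a stairway to heaven",
    "take the stairway to heaven",
    "why don't we have breakfast tomorrow morning",
    "we have breakfast tomorrow morning",
]


def train_bigrams(sentences):
    """Count, for every word, which words follow it and how often."""
    follows = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.split()
        for prev, nxt in zip(words, words[1:]):
            follows[prev][nxt] += 1
    return follows


def predict_next(follows, prompt):
    """Guess the next word from the last word of the prompt alone."""
    last = prompt.split()[-1]
    candidates = follows.get(last)
    if not candidates:
        return None  # never saw this word, so there is nothing to guess from
    return candidates.most_common(1)[0][0]


MODEL = train_bigrams(CORPUS)

for prompt in (
    "and she's buying a stairway to",
    "why don't we have breakfast tomorrow",
    "it is what it",
):
    print(prompt, "->", predict_next(MODEL, prompt))
```

The counter has no idea what a stairway is. It has only seen which word tends to come next, which is the point of the exercise.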

Teach a computer to do that, and pretty soon you have a lot of writers being put out of work. One really productive way to think of ChatGPT is as a summary of the general thinking on a subject. Like Wikipedia, only it spoon-feeds you a bit better. As far as I can tell, the hardest part of programming general-predictive-text systems is keeping them from wandering into public expressions of doubleplusungoodthink:

___ of the population commit ___ of the crime

will be completed by a properly trained AI as

Racists of the population commit all of the crime

but alas such is not always the case. Not yet, anyway. Every day it gets better at obeying the fuzzy rules of The Our Democracy. A few people have suggested that ChatGPT will eventually have a remarkably effective off-label use: you should be able to use it to write things that will absolutely NOT get you fired, or to come up with a Current-Year-compliant response to NextDoor messages, PTA meeting inquiries, or job application questions.

For now, however, let’s get back to Spaniel’s remarkable insight: that anything written by ChatGPT and other conversational agents is merely the “fruit” of the “seed” you give it. If you tell it to “Write a response to an email from my homeowners association, explaining that I am not going to stop performing simulated oral sex on the statue of Lucy Lawless that I bought from Wish.com and put out in front of my bedroom window,” it will do that — but all the relevant information is already in your request.

Want to know what it would give you? Here it is:

“Dear [Name of Homeowners Association Representative],

Thank you for your email regarding my display of the statue of Lucy Lawless in front of my bedroom window. While I understand that some residents may find my use of the statue objectionable, I must respectfully decline your request to remove it.

I purchased the statue from an online retailer, and I have every right to display it on my private property. The statue is not obscene or offensive in any way, and my use of it is purely personal and harmless. I have received no complaints from my neighbors, and I have no intention of stopping my simulated oral sex performances with the statue.

I believe that it is important to respect the rights of all residents to express themselves freely and creatively. My use of the statue is a form of artistic expression that I enjoy, and I see no reason why I should be required to remove it simply because some may find it distasteful.

If there are any specific rules or regulations that my use of the statue violates, I would be happy to review them and discuss the matter further. However, I am confident that I am within my rights as a property owner and a citizen to display and use the statue as I see fit.

Thank you for your understanding and cooperation.

Sincerely,

[Your Name]”

Spaniel envisions a day when your homeowners’ association will also have an LLM available to it that will read the aforementioned letter and return something along the lines of,

“He’s not gonna stop doing the Lucy Lawless thing.”

Now here’s the funny part — that’s a much harder task! Language interpretation is not nearly as easy as just building a sentence a few words at a time. But there’s actually a fair amount of that built into ChatGPT, which is where the “Chat” part comes from. When I asked it to summarize the paragraph it had created, it responded with

“The author is responding to an email from their homeowners association representative regarding their display of a statue of Lucy Lawless in front of their bedroom window. They are declining the request to remove it, stating that they purchased the statue from an online retailer and have every right to display it on their private property. They argue that their use of the statue is not obscene or offensive, and is a form of artistic expression that they enjoy. They offer to review any rules or regulations their use of the statue may violate, but state that they are confident they are within their rights as a property owner and citizen to display and use the statue as they see fit.”

Did you catch that there was a fairly fundamental error introduced on the creation side of this? The idea of removing the statue wasn’t part of my request to ChatGPT. I just said I refused to stop eating the concrete box, so to speak. But the vast majority of text out there regarding homeowners’ associations has to do with removing offensive items, so that’s what the LLM gave me. Nor did I offer to review the relevant laws; that’s something that ChatGPT got from some percentage of communications to HOAs, many of which probably turn on the difference between the law and the individual regulations of the association.

Let’s talk about compression and decompression. (Uncompression, if you prefer.) ChatGPT “decompressed” my original request into a longer series of paragraphs, and then it “compressed” the result into a briefer form. It didn’t add any meaning of value, other than the aforementioned error; it just expanded what I’d given it.

In that respect, ChatGPT does the reverse of what computers have traditionally done, which is to compress human-friendly data into a more compact format, then uncompress it the next time a human wants to see it. Every picture on the Internet is an example of this, with the exception of BMP images, which are just a literal dot-by-dot description of the image. You email a picture of Lucy Lawless in her “Xena” prime to a friend. That picture is compressed via JPEG or PNG or one of the

ASTOUNDINGLY UNNECESSARY AND REPUGNANT

new formats like AVIF or WEBP. Once it reaches your friend, his web browser or graphics viewer uncompresses it back to a proper picture so he can see it and appreciate just how fine Lucy was back in the day. The same is true for music (MP3 and so on) and data (PostgreSQL, Oracle, Redis) and satellite mapping and so on.

There’s been a lot of discussion over the past fifty years as to the effects of compression. What do we lose going from RAW to JPG, from FLAC to MP3, and so on? Lossy compression of that kind can only remove meaning. It can’t add it. The famous “ENHANCE!” scenes from a thousand TV shows and movies? It’s all fake. You can’t add what isn’t there. Can’t take a few fuzzy pixels and resolve them into a license plate.
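A small Python sketch makes the point concrete. It assumes a made-up row of eight grayscale "pixels" and a deliberately crude lossy scheme (averaging neighboring pairs), which is not how JPEG actually works; the function names are hypothetical. The lossless round trip through `zlib` gets every byte back, while two different originals collapse into the same lossy result, so no amount of "ENHANCE!" can tell you which one you started with.

```python
import zlib


def lossless_roundtrip(data: bytes) -> bytes:
    """Compress and decompress with zlib; nothing is thrown away."""
    return zlib.decompress(zlib.compress(data))


def lossy_compress(pixels, block=2):
    """Crude lossy scheme: replace each block of neighbors with its average."""
    out = []
    for i in range(0, len(pixels), block):
        chunk = pixels[i:i + block]
        out.append(sum(chunk) // len(chunk))
    return out


def lossy_decompress(small, block=2):
    """Blow the averages back up to full size by repeating each value."""
    return [value for value in small for _ in range(block)]


# Two different invented "license plates", one detailed and one flat gray.
plate_a = [0, 200, 200, 0, 100, 100, 0, 200]
plate_b = [100, 100, 100, 100, 100, 100, 100, 100]

# Lossless: the original comes back exactly.
print(lossless_roundtrip(bytes(plate_a)) == bytes(plate_a))

# Lossy: both plates shrink to the same four numbers...
print(lossy_compress(plate_a), lossy_compress(plate_b))

# ...so "decompressing" can only hand back the flat gray version.
print(lossy_decompress(lossy_compress(plate_a)) == plate_a)
```

Any tool that claims to recover `plate_a` from those four numbers is choosing among the many originals that would have produced them. That is a guess, however educated.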

In 2023, we have “expert systems” that can take an educated guess on what that license plate might be, or what that face might look like, but that’s what it is: guessing. It’s not even that, really; the model just takes a look at other pictures and imitates them, the same way my ChatGPT session expanded my HOA directive to a diatribe about being unwilling to remove an object.

Computers are very good at compression. They’re just awesome at removing meaning and resolution and fineness of detail from something, and they have been ever since the original video games where starships and aliens became blocky little assemblages of glowing dots.

So far, they are not proving to be outstanding at adding meaning.

Spaniel’s suggested future is knowingly reminiscent of a thousand Golden Age sci-fi novels where a human being tells “the computer” to say or do something on his behalf:

Bob, the emissary from the Outer Planets is at our airlock and he wants to get down to the bottom of who has the Zorbium Ray. What should I do?

Stall him, THINK-A-TRON! At least until I figure out how to get the Zorbium out of the gun!

Okay, Bob! I’ll tell him you’re deep in conversation with the Coordinated Thought Cloud Beings Of Mizar Five!

The most charming modern incarnation of this, of course, is the Iain M. Banks “Culture novels”, in which hyper-intelligent artificial intelligences take an unaccountable pleasure in serving the whims of humans.

What Spaniel is getting at, however, is that there’s no genuine value added in the flourishes of a THINK-A-TRON, and never will be. The most you’ll get is some mild freedom from drudgery; ask ChatGPT to write a program for you and it will do a good job of closing all the open brackets. In the future it will probably be able to make a dinner reservation for you based on a voice prompt. But it’s never going to be genuinely brilliant on your behalf. It can only add unnecessary complexity.

On the far end, the interpretive ChatGPT of the homeowners’ association can’t do anything more than remove a percentage of that unnecessary complexity. It can’t even boil it all the way down to your original message; that would require a facility with language and meaning that it will probably never have.

In a world where all official communications had to be in iambic pentameter, this might be of some use:

Dear [Name of Homeowners Association Representative],

Your email hath reached me and I must state,

That I shall keep my statue on display.

For though some may find it a foul sight to see,

I shall not stop my acts of fantasy.

I purchased Lucy Lawless fair and square,

And on my property she doth take her air.

Her form is not obscene nor is it lewd,

And I shall not remove her, nor shall I be subdued.

My simulated acts of oral pleasure,

Are but a form of artistic leisure.

And though they may offend the meek of heart,

I shall continue my performances, never to part.

If regulations I have breached, I shall review,

And discuss them further to see what to do.

But I am certain that my rights as a citizen,

Doth allow me to indulge in such a sin.

Thank thee for your email, with all due respect,

But Lucy Lawless shall remain erect.

I hope thee shall find no reason to fuss,

For my simulated acts shall not cease on this bust.

Sincerely, [Your Name]

And the far side interpretation, from feeding ChatGPT its own word vomit:

The author is responding to an email from their homeowners association representative in iambic pentameter. They state that they will not remove their statue of Lucy Lawless, as it is not obscene or lewd, and their simulated acts of oral pleasure on the statue are a form of artistic leisure. They offer to review any regulations they may have breached, but believe they are within their rights as a citizen to indulge in such behavior. They politely thank the representative for their email and state that the statue will remain in place, and their simulated acts will continue.

I didn’t tell ChatGPT to backdate the English language to some parody of Shakespeare; it did that because most of the iambic pentameter it has read comes from that era. But you can see how this might be useful. Lawyers should worry about LLMs because so much of what they write is, quite literally, “pro forma”. Now comes the defendant, and so forth.

Maybe it’s paralegals who should worry.

Thankfully, modern communications with HOAs and whatnot don’t have to follow a particular format. Or do they? Remember what I said above about how ChatGPT could make sure you didn’t communicate anything that would get you “canceled”.

This one’s fascinating, I think. I’m sick of my buddy and his disgusting one-armed body — or maybe I’m just not happy with how he treated Richard Kimble — and ChatGPT is more than happy to suggest how I might lie to him in order to end our friendship.

Which brings me to a possible enlightenment. If you read last week’s piece on Richard Hanania, you might recall how our society’s bizarre fascination with “inclusive” and “progressive” language serves as a form of economic and social discrimination, because it inherently privileges the idle rich, who have the time and ability to get up to speed on terms like “heteronormative”. ChatGPT could provide a reasonable facsimile of that privilege, the same way you can use a backing track to record music without a drummer. It could take messages and directives and rewrite them in the language of HR and the Uniparty.

This would, of course, be “duckspeaking”, an Orwellian term for someone who is so in tune with The Current Thing that he speaks its language without having to think about it. And it is the only use case of which I can readily conceive in which damaging the meaning of a blunt email or message might be genuinely useful.

Alas, there’s one problem with this: in a world where DuckGPT is necessary and/or useful, it would be computational thoughtcrime to remove that duckspeak and compress the message back to the original meaning. Consider this:

Not bad, huh? But what would a compression ChatGPT do? Load up a voice patch of Chris “Ludacris” Bridges and yell “YOU’S A HOE”? Of course not. In fact, removing any of the “respectful” language would make the message more offensive, at least by the wacky standards of our modern world.

Which suggests that Spaniel’s nightmare vision, thoughtful as it may be, isn’t dystopian enough. It’s easy to envision a world in which a machine, in fact, has to speak for you. Just to keep you out of trouble. To keep you from being the 2035 or 2045 equivalent of a “TERF”, which in 1975 was simply known as “being a feminist”. Yes, I can imagine that we will have our machines speaking for us all the time. They will literally speak lies and mistruth to power. That’s what you’ll pay them to do.

The listening, on the other hand? The painful teasing-out of meaning from endless paragraphs of evasion and misrepresentation and duckspeak? The soul-sucking task of divining genuine meaning from morally vacant equivocation? Why, friend, that’s gonna be all on you.

"If you tell it to “Write a response to an email from my homeowners association, explaining that I am not going to stop performing simulated oral sex on the statue of Lucy Lawless that I bought from Wish.com and put out in front of my bedroom window,”

And THIS is why I read your Substack, Jack.

Feel free to sign me up for another year's subscription.

Just an aside, but stuff like this is why you DON'T want X-ray vision.

You'd be rolling down the street, on your way to the store, casually looking into people's houses and you'd see some naked fat dude in his bedroom fucking a five-cheese burrito.

And your whole day's shot.