(Last) Weekly Roundup: The Plural Of Trash Anecdote Is Garbage Data Edition

The Internet got pretty excited last week about a piece entitled COVID-19: Evidence Over Hysteria, written by former Mitt Romney staffer and "Silicon Valley growth hacker" Aaron Ginn. The article went, ahem, viral because it said a lot of things that people desperately wanted to hear, whether they are true or not. The ensuing backlash was fairly weapons-grade and it came from a lot of people with letters after their names so the article was unpersoned by Medium and banished to the Internet ghetto of ZeroHedge, which is where you can read it at the above link.

I don't know enough to say whether or not Ginn is right --- but I am also fairly sure that Ginn also doesn't know enough to say whether he is right. My purpose here isn't to discuss the article, but rather to suggest that much of the world is being run into the ground by people like Ginn thanks to what I can only characterize as a shared and immensely powerful delusion. Let's call it the fake law of isomorphic data, or "Ginn's Law" for the moment.

The first clue that Ginn is out of his depth appears so early in the essay that it would be above the fold here at Riverside Green:

I’m quite experienced at understanding virality, how things grow, and data. In my vocation, I’m most known for popularizing the “growth hacking movement” in Silicon Valley that specializes in driving rapid and viral adoption of technology products. Data is data. Our focus here isn’t treatments but numbers. You don’t need a special degree to understand what the data says and doesn’t say. Numbers are universal.

Emphasis mine, because "virality" isn't a real word. It's a Silicon Valley Culture Word coined to describe the rate at which something "goes viral". This Ginn fellow considers himself an expert on "virality" and, in a truly staggering case of Midwit Thinks He Understands The Subject Because He Understands The Analogy, decides that he is also an expert on how actual viruses are spread. This is worse than my current delusion that I would be a great SpecOps soldier because I've become a half-decent CounterStrike player, which is frankly stupid; it's more like me becoming a decent chess player and figuring that I'm ready to get in a time machine and face off against Machiavelli in the ol' Florentine Republic.

Remember this delusion --- that you understand something because you understand something analogous or related to it --- because you're going to see it again, and shortly.

Ginn tell us that "numbers are universal". He could also say that many equations are universal, or at least widely spread. Here comes a brief math lesson, delivered by an English major...

Some math problems have solutions which "scale" pretty well. Consider these two:

5 + 5 = ?

1,234,955,332 + 4,534,843,201 = ?

The answer to the second problem (5,769,798,533) is over half a million times bigger than the first answer (10, dummy!) but it doesn't take us half a million times as long to answer the first problem as the second, even if you're a first-grader.

Now let's look at two more problems. They're both the same problem, actually.

Problem 1: A salesman has to visit three cites today. Atlanta, Boise, and Chicago. Atlanta is 150 miles from Boise and 200 miles from Chicago. Boise is 300 miles from Chicago. He can start in any of the three cities. What's the quickest route?

As a normal human being, you might decide to solve this in a hurry by trying all the combinations. Let's do that:

ATL -> BOI -> CHI : 150+300 = 450 ATL -> CHI -> BOI : 200+300 = 500 CHI -> ATL -> BOI : 200+150 = 350

There are three more combinations, but they are basically the same thing in reverse, so there you go! That takes a minute to do, doesn't it? Surely there's a way to do it quicker, right?

There isn't. You have to try all six potential combinations. Even if you're a computer.

Which leads us to the second problem, which is the same as the first, except now that our salesman has thirty-one cities to cover. Surely there's some kind of shortcut to do that, right? Not quite. For a long time, mathematicians thought that you would have to go through all potential combinations --- which is pretty close to the very big number of (31-2)! which is also 8222838654177922817725562880000000 or something like that, I used a Web calculator which loses interest after a certain number of digits.

My choice of 31 cities wasn't random; it comes from a 1962 contest held by P&G. The winner could get $10,000, which would buy you a Porsche or a decent home. If P&G were to put the same contest up today, however, they'd have to give the money away a minute or two after they did so, because there is now a technique called "Branch And Cut" which combines two earlier methods ("Cutting Plane" and "Branch And Bound") to perform the solution much more quickly. These two techniques work by eliminating obviously stupid choices (like starting by going from New York to Seattle and back to Philadelphia) each step of the way. The technique of "branch pruning" is also used by computer chess programs, which simply "fail to consider" any moves which are obviously poor as they "look ahead" towards a strategy. Human beings have a little of this built into us; only the completely insane thing it would be a good idea to start a business meeting by defecating on the table or screaming their own name a dozen times, even though there is possibly a "future" in which doing so actually sets up a favorable resolution to the meeting eventually. It's also why chess grandmasters could beat good computer programs for a very long time; they had a better sense of how to sacrifice pieces for a victory down the road, so they would follow "branches" of strategy which the computer might immediately discard as a waste of processing time.

Anyway, even the very best programs don't solve the "traveling salesman problem", or TSP, very quickly. More importantly, as you add cities to the list, their calculations tend to also increase in time. In theory, the navigation programs in your car and phone are "solving" TSP, but they are actually using mathematical shortcuts which provide a very close approximation to the correct answer, not actually solving the problem, in much the same way that most of us can guess the answer to some pretty big math problems without being spot on.

(For much more, read this, why dontcha.)

Anyway, one of the "holy grails" of mathematics is an equation or process which quickly solves the TSP regardless of how many cities are involved. This magical equation would solve a million-city TSP about as quickly as it solves a 100-city one, the same way that the calculator app in your computer solves pretty much all multiplication programs in nearly the same amount of time.

Now here's where it gets crazy. There are a lot of problems out there which are just as hard as the TSP for a computer to solve, and most of them are isomorphic to TSP. Which means that they can be "translated" into, and out of, TSP. Here's an example of such a problem: it's the same as TSP after you apply some complicated transformations. (Don't ask me how: I'm just an old guy on a BMX bike.) So if you could figure out a way to easily solve TSP, you could also solve this problem, and many like it.

Let's start working our way back to Mr. Ginn and his "virality", shall we? For obvious reasons, mathematicians are eager to reduce the number of potential problems out there to a comprehensible number. Therefore, it is common for a mathematician to look at a new problem which arises from real or theoretical studies, quickly prove that it is isomorphic to TSP, and then leave it alone so they can get back to working on TSP.

Now, do you remember when you were a kid and you heard an older kid say something really cool and so you decided to say it yourself? That kind of attitude is common among scientists, who are children in many ways. So all the people who work in lesser disciplines than pure mathematics --- and they are all lesser disciplines, when you get down to it --- like to consider things as isomorphic, too. Much of "data science" consists of using the same tools to hack on widely different sets of data. For instance, "machine learning" is super-hot right now in the job market; a few years ago I was doing that for a certain very large bank before I wandered off the job to go World Challenge racing. All the popular examples of machine learning you'll find on the web work on images. The images are reduced to numbers, and then we use number-crunching algorithms on the numbers to "recognize" things. (Here is a great example of this.)

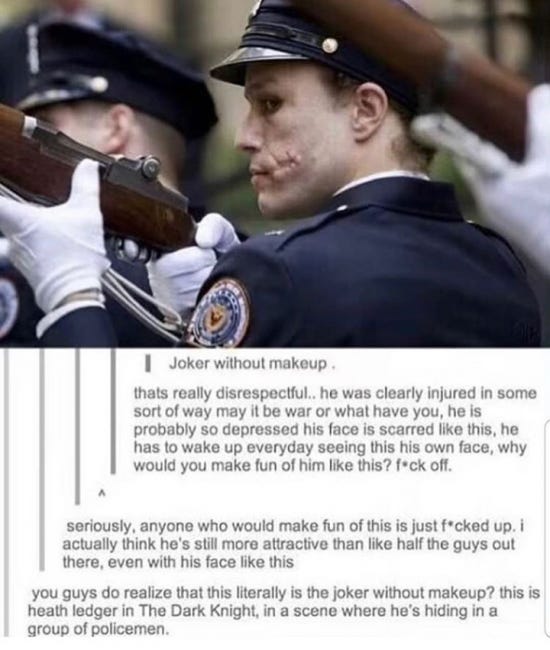

It's very easy to use machine learning to distinguish between photographs of a firefighter and photographs of policemen. This leads people to conclude that machine learning is very smart. Allow me to show you a picture that a machine learning algorithm will unfailingly identify as a police officer:

Ah, but that's not a police officer, is it? That's the Joker. Viewed another way, it's an actor. In no way is it a policeman. You can tell the difference, but the machine cannot. Even if you don't know that movie (The Dark Knight) there are a lot of cues that you're looking at something wrong here, from the photo's framing to the slight unreality of the scar tissue on the face. The kids on 4chan are having all sorts of fun with this image, and the fun is based on the idea that not everyone is smart enough to be in on the joke:

We would say that the people being trolled here are naive, wouldn't we? That's the problem with machine learning: it's fundamentally naive. It doesn't consider that an image might be deliberately subversive or mis-represented. It simply reads the image and notes similarities. In theory, a sufficiently powerful machine-learning system might come to recognize all the signs of subversion and mis-representation, to the point where it would be as good as a person. It will take some time. People are a lot better at processing images than they are at chess. Machine learning will be naive for a long time to come --- frighteningly, it will be naive long after some equally naive people have decided to hand the control of our planes, trains, and automobiles to it. (What happens next? I wrote a story about that.)

Data science, like machine learning, is naive --- but it is even more deeply naive in the sense that it absolutely relies on purity of data. There is no error-correction algorithm out there which can fully account for genuinely compromised data, the same way that people who have gone on "bad trips" courtesy of LSD or ecstasy or edibles report back that they were unable to distinguish reality from their own internal nightmare.

Our current Silicon Valley fetish for data science grew out of a desire to do something with the firehose of data presented to their servers every millisecond. There are billions of people generating thousands of data events per day. Every Like, every flick of a mouse, every physical motion while carrying a GPS- or heart-rate-enabled device. The data is immense. I'd be willing to bet that the human race generates more image data on a daily basis now than it did at all times in history combined up to, say, 2000 or so. Think of all those high-definition phone cameras and webcams. Their endless stream of products and sex and animals doing funny things.

Given access to this torrential and almost entirely truthful stream of data, the algorithms can perform amazing tasks, although our society being what it is we mostly use the algorithms to sell or shame, depending on how we make a living. It knows when you've been sleeping, it knows when you're awake. Facebook and Google could easily determine when you're about to kill yourself, but they choose not to. Instead, they use that acumen to figure out when you need a new razor blade.

Data science is easy because there's so much data. Give me access to your browsing history and even an Atari 800 could probably figure out a lot about you. Many years ago I operated the Web proxy for a few small businesses. I saw what they looked at and I could tie it to individual computers. I ended up learning a lot about those people, trust me --- and I don't have the kind of on-demand data access capacity of the Google Cloud.

It's no wonder that many data scientists consider themselves to be quite intelligent. I thought I was a decent basketball player in fifth grade, until I had to play against schools where not everyone made the basketball team. Data science is so easy, and the tools are so effective, that its proponents start to think it has universal application. But it doesn't.

Witness, if you will, Aaron Ginn. I can't fault a lot of his methodology. He looks at the data being presented, he hacks on it a bit, he looks for patterns, and he says some things which are both interesting and reassuring. Here's the problem: when it comes to the Virus Which Cannot Speak It's Original Name, all the data is trash.

Have you taken a COVID-19 test? Has anyone you know taken such a test? For most of my readers, and for myself, the answer is "No." Did you know that not all of the tests work? Did you know that the hospitals are seeing insane divergences in the percentage of positive results... like a 10x divergence? In some places, one percent of people who take the test are positive. In others, it's ten percent. We aren't sure that it isn't just the tests themselves. Let's not get into the fact that the methods of selecting people to take the test vary widely across the globe: some cities test everyone, others test people who drive through a special queue at the hospital, others tell everyone to stay home so they don't overwhelm the system.

There is no amount of statistical smoothing which can account for these variations. You're not flipping a hundred coins and recording the results here; you're adding up your children's birthdays, picking the IP address that is that number divided by the last number of your stepfather's F-150's VIN, finding the online community closest to that IP address, then asking the people in that community who are frightened of ducks whether or not they have tattoos. The interference is too high to get over; the signal-to-noise ratio is too low to see.

What frightens me about Aaron Ginn is that he didn't bother to consider any of that before he started writing his paper. He just treated the data as being utterly trustworthy --- even the stuff from the Chinese government. It makes me wonder how many other decisions this powerful and influential fellow has made in similar fashion, and with similar ignorance of the corruption infesting his source data. Is that why Romney lost? Is Ginn's obtuse stupidity the flapping butterfly which changes the world?

I'm not as frightened of COVID-19 as I am of its knock-off societal effects. And I'm not as afraid of those near-term knock-on effects, even the so-called Boogaloo, as I am of a future in which people like Aaron Ginn have all of the decision-making power. I fear a world where some blithe idiot looks at numbers on a screen, says something about "all numbers are numbers," then proceeds to destroy humanity with the resulting assumptions. Garbage in, garbage out. Which is funny --- until you find yourself eating garbage.

* * *

Last week, I wrote about the right time to buy a Corvette and a free Ross Bentley seminar. Since we are "reprinting" some of our older magazine articles to give people more reading material, I also posted my Bandit and Continental pieces.