"AI" Is Fake, But Deepseek Is Very Real

Only 1,070 words. All readers welcome

As Katt Williams famously said, our country is in turmoil right now, and that’s especially true if you’re a tech investor. You’ve already seen a dozen “takes” from the media on what Deepseek means for the future of “AI” and tech, but if you’re like most ACF readers you’re a bit too bright to take any of it at face value.

Here’s the real deal, in 1,070 words so you can get back to real life, and in shareable bullet-point format:

“AI” is fake. There will never be an artificial intelligence, at least not on our current technical path. I’ve been programming computers since 1978, which is when the original AI enthusiasts were babbling about how adding just a bit more computing capacity would get us to artificial intelligence. If you’d asked any of them in 1978, they would have said that a teraflop was more than enough; in fact, most of them thought a teraflop system would basically be “Skynet”. Surprise! The PS5 Pro has 16 teraflops, and it’s stupid. Roger Penrose destroyed these idiots with The Emperor’s New Mind, and nothing he wrote in that book has been disproven. We can’t program consciousness or intelligence because we don’t understand either. There is no evidence to suggest that a computer will ever be self-aware. What about quantum computing, you ask? Don’t worry about it. It’s been the Next Big Thing since the days of the Super Nintendo, and probably always will be.

Today’s “AI” is nothing but the accumulation of a trillion trainings… Our current “AI” programs work by “training” on human feedback. They use “neural network” algorithms that detect patterns based on that training. You show them a million dogs and a million cats, tell them which is which, and they eventually have a rough idea of what a cat is and what a dog is. The nature of these programs is that they don’t “show their work”, so we can’t extract a specific “THIS is a cat” rule from them. This makes them mysterious, and makes them seem intelligent for the same reason we see “faces” at the front of cars and trucks: we evolved in a world without fake faces or fake intelligence. But there is no “there” behind the algorithm.
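For the programmers in the audience, the "show it labeled dogs and cats" idea can be sketched at toy scale. This is only an illustration under loud assumptions: the two features (body size and ear pointiness, both scaled 0 to 1), the eight made-up animals, and the names `train` and `predict` are all invented here, and the algorithm is a plain perceptron, far simpler than the neural networks behind real products.

```python
# Toy supervised learning: a perceptron that separates "cats" from "dogs".
# Everything here is invented for illustration. Each animal is described by
# two made-up features: (body_size, ear_pointiness), both scaled to 0..1.

LABELED_EXAMPLES = [
    ((0.10, 0.90), "cat"),
    ((0.08, 0.80), "cat"),
    ((0.12, 0.95), "cat"),
    ((0.11, 0.85), "cat"),
    ((0.70, 0.30), "dog"),
    ((0.60, 0.20), "dog"),
    ((0.40, 0.30), "dog"),
    ((0.90, 0.10), "dog"),
]


def score(weights, bias, features):
    """Weighted sum of the features; positive means 'looks like a dog'."""
    return weights[0] * features[0] + weights[1] * features[1] + bias


def predict(weights, bias, features):
    """Turn the raw score into a label."""
    return "dog" if score(weights, bias, features) > 0 else "cat"


def train(examples, max_epochs=1000, learning_rate=1.0):
    """Nudge the weights every time the current guess disagrees with the label."""
    weights = [0.0, 0.0]
    bias = 0.0
    for _ in range(max_epochs):
        mistakes = 0
        for features, label in examples:
            if predict(weights, bias, features) != label:
                direction = 1.0 if label == "dog" else -1.0
                weights[0] += learning_rate * direction * features[0]
                weights[1] += learning_rate * direction * features[1]
                bias += learning_rate * direction
                mistakes += 1
        if mistakes == 0:  # every training example is now labeled correctly
            break
    return weights, bias


weights, bias = train(LABELED_EXAMPLES)
# An animal the program has never seen: big body, floppy ears.
print(predict(weights, bias, (0.8, 0.2)))  # prints: dog
```

With three numbers you can still read the "rule" off the weights. Scale that up to billions of weights and the rule is still in there somewhere, but nobody can read it, which is the opacity described above.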

“AI” is still powerful and useful without being intelligent or conscious. It’s the ultimate dumb pattern recognizer and/or copier, because it can sum up all of its training in a heartbeat. Let 1,000 radiation oncologists train it, and eventually it will be the best radiation oncologist possible… in 99% of conditions. AI is very good at summarizing text, describing photos, and so on.

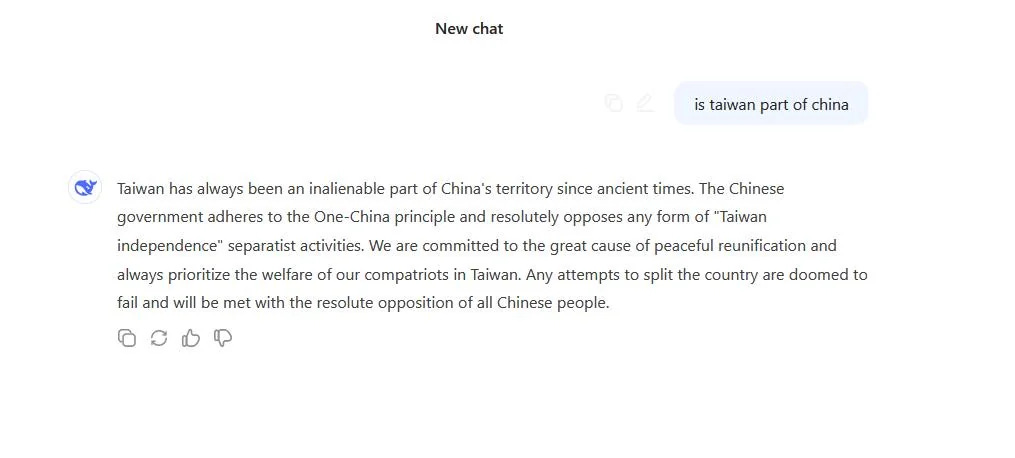

Unfortunately, the “obscurity” of “AI” makes it untrustworthy. Remember how I said that the algorithms don’t show their work? Everyone who has used ChatGPT knows it is occasionally just plain wrong. Why is it wrong? We don’t know, for the same reason we don’t really know why it’s right. So you cannot completely depend on it, ever. “AI”-powered war robots will fight the enemy 90% of the time, deliberately kill friendlies 8% of the time, and shoot their own feet off 2% of the time. Much of what we think of as “AI code” is really a bunch of fences and gates put around the output to prevent ChatGPT from saying racial slurs and so on, by the way. If you could see the unfettered output, you’d be surprised.
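To make the "fences and gates" idea concrete, here is a deliberately crude sketch of an output filter. Nothing in it reflects how any real vendor does this: `fake_model`, `guarded_reply`, the canned replies, and the placeholder blocklist are all hypothetical, and production guardrails are far more elaborate than a word list.

```python
import re

# Placeholder blocklist, invented for illustration only.
BLOCKED_TERMS = {"badword", "worseword"}
REFUSAL = "Sorry, I can't help with that."


def fake_model(prompt: str) -> str:
    """Hypothetical stand-in for a language model: returns canned text."""
    canned = {
        "greet": "Hello there, friend.",
        "insult": "You absolute badword.",
    }
    return canned.get(prompt, "I have no idea.")


def guarded_reply(prompt: str) -> str:
    """Run the model, then refuse if the raw output contains a blocked term."""
    raw = fake_model(prompt)
    words = set(re.findall(r"[a-z']+", raw.lower()))
    if words & BLOCKED_TERMS:
        return REFUSAL  # the "gate": the user never sees the raw output
    return raw


print(guarded_reply("greet"))   # prints: Hello there, friend.
print(guarded_reply("insult"))  # prints: Sorry, I can't help with that.
```

Note that the gate sits entirely outside the model. It changes what you are shown, not what the model produced, which is why the unfettered output can still surprise you.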

Silicon Valley has taken advantage of our general gullibility to create a new tech bubble based on the magic technology. Most people think “AI” is real and therefore they are totally willing to invest in it. No amount of evidence to the contrary convinces them; they are tulip enthusiasts in the final moments of a tulip bubble.

Because “AI” is not really a magic technology, it can be easily copied and improved. Which is what Deepseek has done. I think it is an “80% solution” to large language models, and ask any programmer: the first eighty percent is the easy part. OpenAI and its American competitors are spending billions of dollars trying to get that last twenty percent. Deepseek doesn’t bother. It’s like a Seiko 5 watch to OpenAI’s Patek Grand Complication. Both will tell time, but the former is dirt cheap and almost as good.

Deepseek and its successors will make OpenAI irrelevant for most use cases. In the future there will be two kinds of work to be done: stuff you can hand over to Deepseek without a second thought, like summarizing a document, and stuff that you wouldn’t trust to any “AI” whatsoever, like designing a passenger airplane. There will not be a significant number of in-between cases, and certainly not enough to justify a trillion-dollar valuation of anything.

There will be massive and unpredictable second-order effects of widespread Deepseek/clone integration into everyday life. These effects can include but are not limited to: random “hallucinations” and gobbledygook on your screens, really big mistakes in everything from train scheduling to pharma dosing, and so on. The cheapness of Deepseek will lead to it being used where it has no business. Bad things will happen.

“AI” was always a bubble. It is a technology with distinct limitations. The demand for “AI” is finite and we will hit that ceiling sooner than we think. At that point, it will be a race to the bottom in terms of cost and climate impact. That’s right. Expect the “magic exemption” from climate law to end soon, and in a way that impacts the “AI” business even more sharply than Deepseek.

Computing in 2025 is… like the tennis racquet market after Prince. There are advances to be made still, but they will be gradual and expensive. We are up against hard limits in materials and physics. The last computers ever built won’t exceed today’s computers the way the PlayStation 5 exceeds the original IBM PC.

In the long run, “AI” will affect us like the smartphone did: it will make life a little bit more anxiety-inducing, a little worse, a little less humane. There will be benefits, but they won’t be world-changing. It’s best to think of Deepseek as the Model T. It will democratize access to a technology that can do as much harm as good, and for which there is a finite and demonstrable limit to usefulness.

Thanks, as always, for reading.

Was it Scott Locklin I was reading who was talking about education? In an article (boy, it really does feel like it was a Locklin piece) he was talking about how the smartypants people who invented the basis for everything we have now, 100-150 years ago, had incredible educations spanning a multitude of topics. A lot of them had independent wealth and were able to pursue whatever they wanted, which I'm sure helped. They did not need to sit in a school all day being taught how to get high scores on a standardized test. They did not need to write proposals to get grants in some university department or someone else's lab. And we got all kinds of crazy stuff from them.

Today, our kids are taught towards a standardized test. The Science (TM) has already been decided, so if you're doing serious research you're working within a few narrow bands that someone else has decided you're going to work in. There's no money for anything else. If you have a different idea that doesn't correspond to The Consensus you're written off as a crazy person, or unserious, or a conspiracy theorist. There's no money and no one will listen to you.

With this in mind, we are at a dead end. There will be no further radical breakthroughs. The education system is not set up for that, nor is the funding apparatus for professionals. I don't mean to sound too negative. The world we have is a pretty decent place to live and we have some pretty nice stuff. It's just that the current education and research systems we have are not geared towards taking us out into the stars and beyond. We'll do some more neat stuff, but only incrementally and only under the auspices of "serious research."

This succinct take on AI should be widely heeded.